“We shall not fail or falter; we shall not weaken or tire…Give us the tools and we will finish the job.” – Winston Churchill

I don’t often blog about specific language features, but over the past few weeks I’ve spoken to a few folks who did not know of the “yield” keyword and the “yield return” and “yield break” statements, so I thought it might be a good opportunity to shed some light on this little-known but extremely useful C# feature. Chances are, you’ve already used this feature indirectly without realizing it.

We’ll start with a problem I’ve seen plague many applications. Often, you’ll call a method that returns a List<T> or some other concrete collection. The method probably looks something like this:

public static List<string> GenerateMyList()

{

List<string> myList = new List<string>();

for (int i = 0; i < 100; i++)

{

myList.Add(i.ToString());

}

return myList;

}

I’m sure your logic is going to be significantly more complex than what I have above but you get the idea. There are a few problems and inefficiencies with this method. Can you spot them?

- The entire List<T> must be stored in memory.

- Its caller must wait for the List<T> to be returned before it can process anything.

- The method itself returns a List<T> back to its caller.

As an aside – public methods should avoid returning a List<T>. Full details can be found here. The main idea is that if you later change the method signature to return a different collection type, that is a breaking change for your callers.

In any case, I’ll focus on the first two items in the list above. If the List<T> that is returned from the GenerateMyList() method is large then that will be a lot of data that must be kept around in memory. In addition, if it takes a long time to generate the list, your caller is stuck until you’ve completely finished your processing.
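To make that cost concrete, here is a minimal sketch of the same method with a counter bolted on. The EagerDemo class and the Generated field are my own additions for illustration and are not part of the original method.

```csharp
using System;
using System.Collections.Generic;

public static class EagerDemo
{
    // Hypothetical counter, added only to observe how much work has been done.
    public static int Generated;

    public static List<string> GenerateMyList()
    {
        List<string> myList = new List<string>();
        for (int i = 0; i < 100; i++)
        {
            Generated++;
            myList.Add(i.ToString());
        }
        return myList;
    }

    public static void Main()
    {
        List<string> items = GenerateMyList();

        // The caller only gets control back once every item exists.
        Console.WriteLine("Generated before first use: " + Generated); // 100
        Console.WriteLine("First item: " + items[0]);                  // 0
    }
}
```

Even if the caller only cares about the first item, all 100 strings have been created and stored by the time it sees anything.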

Instead, you can use that nifty “yield” keyword. This allows the GenerateMyList() method to return items to its caller as they are being processed. This means that you no longer need to keep the entire list in memory and can just return one item at a time until you get to the end of your returned items. To illustrate my point, I’ll refactor the above method into the following:

private static IEnumerable<string> GenerateMyList()

{

for (int i = 0; i < 100; i++)

{

string value = i.ToString();

Console.WriteLine("Returning {0} to caller.", value);

yield return value;

}

Console.WriteLine("Method done!");

yield break;

}

A few things to note in this method. The return type has been changed to an IEnumerable<string>. This is one of those nifty interfaces that exposes an enumerator, which allows the caller to cycle through the results in a foreach or while loop. In addition, the “yield return value” statement hands that item to the caller at that point, not when the entire method has completed its processing. This allows for a very exciting caller-callee type relationship. For example, if I call this method like so:

IEnumerable<string> myList = GenerateMyList();

foreach (string listItem in myList)

{

Console.WriteLine("Item: " + listItem);

}

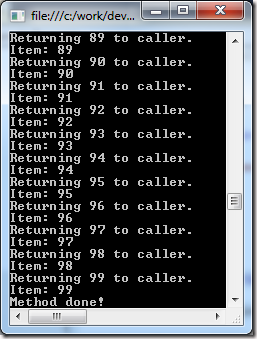

The output would be:

This shows that each item is returned to the caller as soon as it is produced. So, how does this work? At compile time, the compiler generates a class that implements the iterator’s behavior. Essentially, this means that the GenerateMyList() method body gets placed into that class’s MoveNext() method. In fact, if you open up the compiled assembly in Reflector, you can see that plumbing in place (comments are mine and some code was omitted for clarity’s sake):

private bool MoveNext()

{

this.<>1__state = -1;

this.<i>5__1 = 0;

// My for loop has changed to a while loop.

while (this.<i>5__1 < 100)

{

// Sets a local value

this.<value>5__2 = this.<i>5__1.ToString();

// Here is my Console.WriteLine(...)

Console.WriteLine("Returning {0} to caller.", this.<value>5__2);

// Here is where the current member variable

// gets stored.

this.<>2__current = this.<value>5__2;

this.<>1__state = 1;

// We return "true" to the caller so it knows

// there is another record to be processed.

return true;

...

}

// Here is my Console.WriteLine() at the bottom

// when we've finished processing the loop.

Console.WriteLine("Method done!");

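// We return "false" to the caller so it knows

// there are no more records to be processed.

return false;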

}
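If the compiler-generated names make that hard to read, here is a rough hand-written equivalent. This is my own sketch, not what the compiler emits: the class name MyListEnumerator, the field names, and the state numbering are all made up for illustration, and I left out the Console.WriteLine calls to keep it short.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

// Hypothetical hand-written stand-in for the compiler-generated class.
public sealed class MyListEnumerator : IEnumerator<string>
{
    private int state;      // 0 = not started, 1 = inside the loop, -1 = finished
    private int i;          // the loop variable, hoisted into a field
    private string current; // the value most recently handed to the caller

    public string Current { get { return current; } }
    object IEnumerator.Current { get { return current; } }

    public bool MoveNext()
    {
        switch (state)
        {
            case 0:      // first call: run the loop initializer
                i = 0;
                state = 1;
                break;
            case 1:      // resuming after a yield: run the loop increment
                i++;
                break;
            default:     // already finished
                return false;
        }

        if (i < 100)
        {
            current = i.ToString();
            return true; // plays the role of "yield return value"
        }

        state = -1;      // fell out of the loop, plays the role of "yield break"
        return false;
    }

    public void Reset() { throw new NotSupportedException(); }
    public void Dispose() { }
}

public static class HandWrittenIteratorDemo
{
    public static void Main()
    {
        using (MyListEnumerator e = new MyListEnumerator())
        {
            // Roughly what a foreach loop does on the caller's behalf.
            while (e.MoveNext())
            {
                Console.WriteLine("Item: " + e.Current);
            }
        }
    }
}
```

The key idea is that the loop variable lives in a field rather than on the stack, so each call to MoveNext() can pick up exactly where the previous one left off.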

Pretty straightforward. Of course, the real power is that the compiler converts the “yield return <blah>” into a nice clean enumerator with a MoveNext(). In fact, if you’ve used LINQ, you’ve probably used this feature without even knowing it. Consider the following code:

private static void OutputLinqToXmlQuery()

{

XDocument doc = XDocument.Parse

(@"<root><data>hello world</data><data>goodbye world</data></root>");

var results = from data in doc.Descendants("data")

select data;

foreach (var result in results)

{

Console.WriteLine(result);

}

}

The “results” object will, by default, be an internal iterator type (“WhereSelectEnumerableIterator” in the .NET Framework) which exposes a MoveNext() method. In fact, that is also why the results object doesn’t allow you to do something like this:

var results = from data in doc.Descendants("data")

select data;

var bad = results[1];

IEnumerable<T> does not expose an indexer that lets you go straight to a particular element, because the full collection hasn’t been generated yet. Instead, you would do something like this:

var results = from data in doc.Descendants("data")

select data;

var good = results.ElementAt(1);

And then under the covers, for a lazily generated sequence like this one, the ElementAt(int) method will just keep calling MoveNext() until it reaches the index you specified. Something like this:

Note: this is my own code and is NOT from the .NET Framework – it is merely meant to illustrate a point.

public static XElement MyElementAt(this IEnumerable<XElement> elements,

int index)

{

int counter = 0;

using (IEnumerator<XElement> enumerator =

elements.GetEnumerator())

{

while (enumerator.MoveNext())

{

if (counter == index)

return enumerator.Current;

counter++;

}

}

return null;

}
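One nice consequence of all this is that an iterator only runs as far as its caller asks it to. Here is a small sketch that pairs the yield-based method with the real ElementAt(int) from System.Linq. The LazyDemo class and the Produced counter are my own additions, there only so we can see how much work was actually done.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LazyDemo
{
    // Hypothetical counter, added only to observe how far the iterator ran.
    public static int Produced;

    public static IEnumerable<string> GenerateMyList()
    {
        for (int i = 0; i < 100; i++)
        {
            Produced++;
            yield return i.ToString();
        }
    }

    public static void Main()
    {
        Produced = 0;

        // Nothing runs until ElementAt starts calling MoveNext().
        string second = GenerateMyList().ElementAt(1);

        Console.WriteLine("Second item: " + second);      // 1
        Console.WriteLine("Items produced: " + Produced); // 2
    }
}
```

Only two of the 100 items are ever created, because ElementAt stops calling MoveNext() as soon as it has the element it was asked for. The List<string> version would have built all 100 first.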

Hope this helps to demystify some things and put another tool in your toolbox.

Until next time.