So, I was working with a customer who is writing their first application using LINQ. They had previously been bitten by the failure to close and dispose their SqlConnection objects. This is actually a fairly common problem and usually leads to those pesky SqlExceptions detailing that there are no connections left in the pool.

So, since LINQ to SQL abstracts out much of the direct database interaction, they were concerned about when the underlying SqlConnections are closed. I will walk through how I answered their question using a few of my favorite tools:

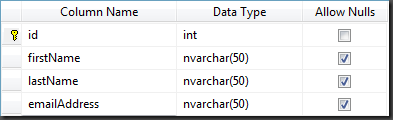

To start off, I created a simple SQL Table called Users:

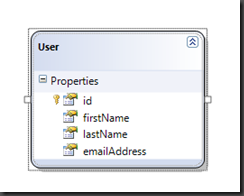

Then, I created a simple LINQ to SQL dbml:

Now that the plumbing is in place, I can write some simple code to return the data from the table and display it to the console window:

1: LinqConnectionSampleDataContext db =

2: new LinqConnectionSampleDataContext();

3:

4: Table<User> users = db.GetTable<User>();

5:

6: IQueryable<User> userQuery =

7: from user in users

8: orderby user.firstName

9: select user;

10:

11:

12: foreach (User user in userQuery)

13: {

14:

15: Console.WriteLine("ID={0}, First Name={1}",

16: user.id,

17: user.firstName);

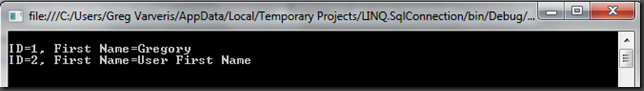

So, now when the application is executed, the output is as follows:

So, since Linq to Sql uses an underlying SqlConnection to do its work, we can set a breakpoint on the Close() method of that class in WinDBG. If you are unfamiliar with this great debugging tool, you can find a simple walkthrough on how to set it up here.

There are a number of ways to set a breakpoint in managed code in WinDBG. Here are the steps that I followed:

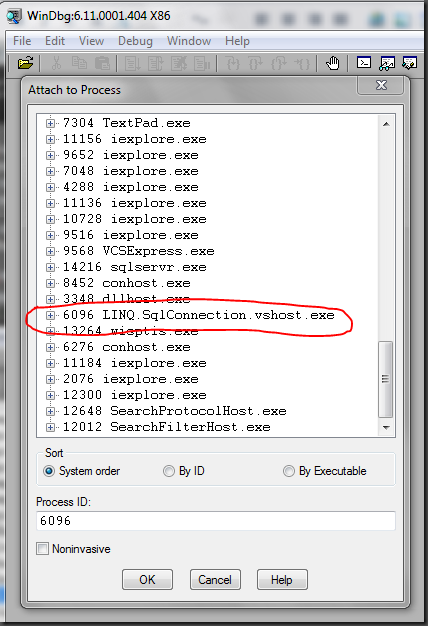

Step 1. Launch WinDBG and attach to the process in question.

Step 2. Load the SOS extension into WinDBG by executing:

.loadby sos mscorwks

Step 3. Set the managed breakpoint using the !bpmd command. For this step, the !bpmd command accepts a variety of parameters. Basically, you can pass it either:

I chose the latter method because it’s relatively quick and I knew exactly what I wanted. So, the syntax for this method is:

!bpmd <module name> <managed function name>

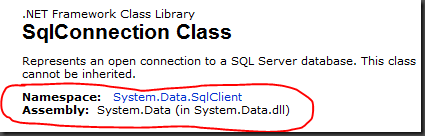

You can get the module name from visiting the SqlConnection page up on MSDN. On this page, we can get the module name and the namespace to the class:

From this, we can get both parameters necessary:

- Module Name: System.Data.dll

- Managed Function Name: System.Data.SqlClient.SqlConnection.Close

So, our command in WinDBG becomes:

1: !bpmd System.Data.dll

2: System.Data.SqlClient.SqlConnection.Close

Once you enter in this command, you should get output similar to the following in the WinDBG window:

0:014> !bpmd System.Data.dll System.Data.SqlClient.SqlConnection.Close

Found 1 methods...

MethodDesc = 544a0418

Setting breakpoint: bp 5455DC80 [System.Data.SqlClient.SqlConnection.Close()]

Step 4. “Go” in the debugger and wait for your breakpoint to be hit.

For this, the command is simply “g”.

0:014> g

Eventually, your breakpoint will be hit in the debugger and you should get output similar to the following:

Breakpoint 0 hit

eax=5457da68 ebx=04d7e9dc ecx=0185cd30 edx=018e56b0 esi=01870d80 edi=04d7e9a4

eip=5455dc80 esp=04d7e860 ebp=04d7e868 iopl=0 nv up ei pl nz na po nc

cs=001b ss=0023 ds=0023 es=0023 fs=003b gs=0000 efl=00000202

System_Data_ni+0xcdc80:

5455dc80 55 push ebp

Step 5. Print out the call-stack.

The command to print out the call stack in SOS and WinDBG is “!clrstack”:

0:008> !clrstack

This will print out the managed call stack, which turns out to be:

OS Thread Id: 0x1d70 (8)

ESP EIP

04d7e860 5455dc80 System.Data.SqlClient.SqlConnection.Close()

04d7e864 77e20586 System.Data.Linq.SqlClient.SqlConnectionManager

.CloseConnection()

04d7e870 77e20554 System.Data.Linq.SqlClient.SqlConnectionManager

.ReleaseConnection(...)

04d7e87c 77e1da35 System.Data.Linq.SqlClient.

ObjectReaderCompiler+ObjectReaderSession`1[...].Dispose()

04d7e888 77e1ddac System.Data.Linq.SqlClient.

ObjectReaderCompiler+ObjectReaderSession`1[...].CheckNextResults()

04d7e894 77e1df2c System.Data.Linq.SqlClient.

ObjectReaderCompiler+ObjectReaderBase`1[...].Read()

04d7e8a0 77e1ea2d System.Data.Linq.SqlClient.

ObjectReaderCompiler+ObjectReader`2[...].MoveNext()

04d7e8ac 004f1a12 LINQ.SqlConnection.Program.Main(System.String[])

So, if you’re having trouble parsing this, the take away here is that when you iterate through a Linq resultset and you get to the end, the ObjectReaderSession will automatically close the Connection to the database.

Now, this is a simple HelloWorld code sample for retrieving a result-set and there are obviously a number of ways to do the same thing. The customer’s code was closer to the following:

1: using (IEnumerator<User> enumerator =

2: context.ExecuteQuery<User>(sqlStatement).GetEnumerator())

3: {

4:

5: while (enumerator.MoveNext())

6: {

7:

8: // Do something here

9:

10: }

11:

12: }

In this situation, we get an IEnumerator<T> back from the database call and iterate through it. Now, this part is very important. If you are iterating through the result set to completion – the connection will be closed the same as the above. However, if you do something like this:

1: using (IEnumerator<User> enumerator =

2: db.ExecuteQuery<User>(sqlStatement).GetEnumerator())

3: {

4: while (enumerator.MoveNext())

5: {

6:

7: Console.WriteLine("ID={0}, First Name={1}",

8: enumerator.Current.id,

9: enumerator.Current.firstName);

10:

11: // Stop iterating after this record.

12: break;

13:

14: }

15:

16: }

Please note the “break” statement. Essentially, if you are NOT iterating through to completion, the call stack looks like:

OS Thread Id: 0x251c (11)

ESP EIP

0522e73c 5455dc80 System.Data.SqlClient.SqlConnection.

Close()

0522e740 77e20586 System.Data.Linq.SqlClient.SqlConnectionManager.

CloseConnection()

0522e74c 77e20554 System.Data.Linq.SqlClient.SqlConnectionManager.

ReleaseConnection(...)

0522e758 77e1da35 System.Data.Linq.SqlClient.ObjectReaderCompiler+

ObjectReaderSession`1[...].Dispose()

0522e764 77e1ea12 System.Data.Linq.SqlClient.ObjectReaderCompiler+

ObjectReader`2[...].Dispose()

0522e768 00691bde LINQ.SqlConnection.Program.Main(System.String[])

The connection will NOT be closed until you call Dispose() on the ObjectReader (IEnumerable) object. This means that if you happen to write some code without the Using… statement when returning data like this:

1: IEnumerator<User> enumerator =

2: db.ExecuteQuery<User>(sqlStatement).GetEnumerator();

3:

4: while (enumerator.MoveNext())

5: {

6:

7: Console.WriteLine("ID={0}, First Name={1}",

8: enumerator.Current.id,

9: enumerator.Current.firstName);

10:

11: // Stop iterating after this record.

12: break;

13:

14: }

The SqlConnection.Close() method will NOT be called. This is because you have full control over the lifetime of the IEnumerator<T> object and you should know when you are done with it.

Now, along those lines, you may be asking yourself – what if I did something like this:

1: LinqConnectionSampleDataContext db =

2: new LinqConnectionSampleDataContext();

3:

4: Table<User> users = db.GetTable<User>();

5:

6: IQueryable<User> userQuery =

7: from user in users

8: orderby user.firstName

9: select user;

10:

11:

12: foreach (User user in userQuery)

13: {

14:

15: Console.WriteLine("ID={0}, First Name={1}",

16: user.id,

17: user.firstName);

18:

19: break;

20: }

Where you break before you iterate through to completion? In that situation, Dispose() will still be called on the IQueryable<T> object. How? Because of a compile-time optimization we do. We insert a finally statement after the userQuery has been used. This compiles down to (in IL):

try{

L_005d: br.s L_0084

L_005f: ldloc.s CS$5$0002

L_0061: callvirt instance !0...get_Current()

L_0066: stloc.3

L_0067: ldstr "ID={0}, First Name={1}"

L_006c: ldloc.3

L_006d: callvirt instance int32 LINQ.SqlConnection.User::get_id()

L_0072: box int32

L_0077: ldloc.3

L_0078: callvirt instance string LINQ.SqlConnection.User::get_firstName()

L_007d: call void [mscorlib]System.Console::WriteLine(string, object, object)

L_0082: br.s L_008d

L_0084: ldloc.s CS$5$0002

L_0086: callvirt instance bool [mscorlib]System.Collections.IEnumerator::MoveNext()

L_008b: brtrue.s L_005f

L_008d: leave.s L_009b

}finally{

L_008f: ldloc.s CS$5$0002

L_0091: brfalse.s L_009a

L_0093: ldloc.s CS$5$0002

L_0095: callvirt instance void [mscorlib]System.IDisposable::Dispose()

L_009a: endfinally

}

L_009b: ret

.try L_005d to L_008f finally handler L_008f to L_009b

The text in red is my emphasis. So, the moral of this story, when you take control of the data yourself, you MUST call dispose on the IEnumerable<T> object when you are done with it.

Enjoy!