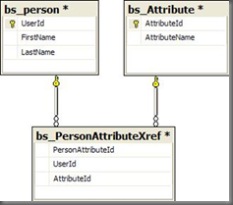

If you've been a developer for any length of time, you have probably come across this question: given a one-to-many relationship, what is the best way to insert those values into a database? For example, let's say that you have a Person table and an Attribute table. Each person can have more than one attribute associated with them, hence the one-to-many relationship. Your database diagram probably looks something like this:

So, if given a Person and a list of Attributes for that person, what is the best way to insert rows into the PersonAttributeXref table? Well, if this is the first time that you've done something like this, you probably came up with something like this:

- Create a Stored Procedure that accepts a UserId and AttributeId value and then insert one row into the PersonAttributeXref table.

- Loop through the selected Attributes and call that Stored Procedure once for each item you would like to insert.

So what is wrong with this method? Not a whole lot if you are only inserting a few (1 to 3) rows into the PersonAttributeXref table. However, when inserting more than those few rows into the table, you will begin to notice a performance hit. Why? Well, if you are using something like the Enterprise Library to perform your inserts, your code probably looks a little something like this:

    ' Requires: Imports System.Data
    '           Imports Microsoft.Practices.EnterpriseLibrary.Data
    Public Sub InsertAttribute(ByVal userId As Int32, ByVal attributeId As Int32)
        Dim db As Database = DatabaseFactory.CreateDatabase()

        Using dbCommand As Common.DbCommand = _
                db.GetStoredProcCommand("USP_BS_INSERT_PERSONATTRIBUTEXREF")
            db.AddInParameter(dbCommand, "@UserId", DbType.Int32, userId)
            db.AddInParameter(dbCommand, "@AttributeId", DbType.Int32, attributeId)

            Try
                db.ExecuteNonQuery(dbCommand)
            Catch ex As SqlClient.SqlException
                '// Insert error handling here.
            End Try
        End Using
    End Sub
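
The calling loop from the second bullet might look like the sketch below. To keep it self-contained, the insert routine is passed in as a delegate (`insertOne`, a hypothetical parameter); in a real application it would be the `InsertAttribute` method above. The stub only prints and counts calls, which makes the cost visible: one stored procedure call per attribute.

```vb
Imports System
Imports System.Collections.Generic

Module PerRowInsertSketch

    ' Calls insertOne once per attribute ID and returns the number of calls made.
    ' insertOne is a hypothetical stand-in for InsertAttribute(userId, attributeId).
    Public Function InsertEach(ByVal userId As Int32, _
                               ByVal attributeIds As IEnumerable(Of Int32), _
                               ByVal insertOne As Action(Of Int32, Int32)) As Int32
        Dim calls As Int32 = 0
        For Each attributeId As Int32 In attributeIds
            insertOne(userId, attributeId)
            calls += 1
        Next
        Return calls
    End Function

    Sub Main()
        ' Three attributes means three separate calls.
        Dim roundTrips As Int32 = InsertEach(42, New Int32() {1, 2, 3}, _
            Sub(u, a) Console.WriteLine("insert user {0}, attribute {1}", u, a))
        Console.WriteLine("{0} separate stored procedure calls", roundTrips)
    End Sub

End Module
```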

The problem with something like this is that each time you call this method, a connection to the database is opened and a single insert is completed. Opening a connection is expensive, even with connection pooling. In fact, during some tests that I ran locally, calling this method 100 times took in excess of 937,500 ticks to complete. You also don't have the option of rolling back the rows already inserted if something fails.
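
Numbers like these depend on how they are measured. As a rough sketch of one way to time a batch of calls, assuming `System.Diagnostics.Stopwatch` is available: the helper name `TimeInTicks` and the placeholder workload are hypothetical, and this is not necessarily the harness behind the figure above. The sketch reads `Stopwatch.Elapsed.Ticks`, which is in 100-nanosecond units, rather than `Stopwatch.ElapsedTicks`, which is in units of the timer frequency.

```vb
Imports System
Imports System.Diagnostics

Module TimingSketch

    ' Runs the given work once and returns the elapsed time
    ' in 100-nanosecond ticks (the same unit TimeSpan uses).
    Public Function TimeInTicks(ByVal work As Action) As Long
        Dim watch As Stopwatch = Stopwatch.StartNew()
        work()
        watch.Stop()
        Return watch.Elapsed.Ticks
    End Function

    Sub Main()
        ' Placeholder workload; in practice this would be the loop
        ' that calls InsertAttribute 100 times.
        Dim ticks As Long = TimeInTicks(Sub() System.Threading.Thread.Sleep(20))
        Console.WriteLine("{0} ticks", ticks)
    End Sub

End Module
```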

Well, chances are you've noticed this issue and have tried to overcome it in a variety of ways. In fact, the next thing that I've seen people try is to concatenate the attribute IDs into a delimited string and then pass that string to a single stored procedure:

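    ' MAXINSERTS is a constant defined elsewhere (the number of rows to insert).
    Dim attributeIdList As New System.Text.StringBuilder()

    For i As Int32 = 1 To MAXINSERTS
        ' Add a comma before every value except the first.
        If attributeIdList.Length > 0 Then
            attributeIdList.Append(",")
        End If
        attributeIdList.Append(i.ToString())
    Next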

And then in the Stored Procedure, you parse the string using a simple string-splitting UDF and perform the insert there, all wrapped in a nice little transaction. If you have done something like this successfully, you have probably seen transaction times somewhere in the neighborhood of 625,000 ticks for 100 inserted rows. Wow! That's a nice performance boost, right? Yes, it is. However, I've always been a firm believer that SQL Server is no place to be concatenating and splitting strings. Just because you can do it doesn't mean it should be done. In fact, I'd argue that splitting and concatenating strings makes your procedure a lot less supportable than performing similar actions in .NET code.
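
For comparison, here is a minimal sketch of the same join and split done entirely in .NET. The names `JoinIds` and `SplitIds` are hypothetical; the split half is roughly the work that the UDF has to do inside SQL Server in this approach.

```vb
Imports System
Imports System.Collections.Generic

Module DelimitedIdsSketch

    ' Builds a comma-delimited string such as "1,2,3" from a list of IDs.
    Public Function JoinIds(ByVal ids As IEnumerable(Of Int32)) As String
        Dim parts As New List(Of String)
        For Each attributeId As Int32 In ids
            parts.Add(attributeId.ToString())
        Next
        Return String.Join(",", parts.ToArray())
    End Function

    ' Parses a comma-delimited string back into integers.
    ' Int32.Parse throws if a piece is not a valid integer.
    Public Function SplitIds(ByVal delimited As String) As Int32()
        Dim result As New List(Of Int32)
        Dim pieces As String() = _
            delimited.Split(New Char() {","c}, StringSplitOptions.RemoveEmptyEntries)
        For Each piece As String In pieces
            result.Add(Int32.Parse(piece.Trim()))
        Next
        Return result.ToArray()
    End Function

    Sub Main()
        Dim csv As String = JoinIds(New Int32() {1, 2, 3})
        Console.WriteLine(csv)
        Console.WriteLine(SplitIds(csv).Length)
    End Sub

End Module
```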

Faced with these two options, I'm likely to propose a third. In .NET, you have the option of using Transactions. Transactions in .NET work the same way as Transactions in SQL: you wrap one or more operations in a Transaction, and if all operations succeed, the transaction is committed; if one or more fail, you roll it back. How would that work in the context of this example? Well, assuming you have an array of attribute IDs, your code would probably look like this:

    ' Requires: Imports System.Data
    '           Imports Microsoft.Practices.EnterpriseLibrary.Data
    Public Sub InsertAttributeWithTransaction(ByVal userId As Int32, _
                                              ByVal attributeIdList As Int32())
        Dim db As Database = DatabaseFactory.CreateDatabase()

        Using dbCommand As Common.DbCommand = _
                db.GetStoredProcCommand("USP_BS_INSERT_PERSONATTRIBUTEXREF")
            db.AddInParameter(dbCommand, "@UserId", DbType.Int32, userId)
            ' Placeholder value; overwritten for each attribute in the loop below.
            db.AddInParameter(dbCommand, "@AttributeId", DbType.Int32, -1)

            Using connection As Common.DbConnection = _
                    db.CreateConnection()
                connection.Open()

                Dim transaction As Common.DbTransaction = _
                        connection.BeginTransaction()
                Try
                    ' Reuse one command, one connection and one transaction for every row.
                    For i As Int32 = 0 To attributeIdList.Length - 1
                        dbCommand.Parameters("@AttributeId").Value = _
                                attributeIdList(i)
                        db.ExecuteNonQuery(dbCommand, transaction)
                    Next

                    ' Commit the transaction
                    transaction.Commit()
                Catch ex As Exception
                    ' Rollback transaction
                    transaction.Rollback()
                    ' Log or rethrow here so the caller knows the insert failed.
                End Try

                connection.Close()
            End Using
        End Using
    End Sub

As you can see, this is pretty nice and straightforward. You open the connection, create the transaction, process the inserts and then, depending upon the result of the operations, you either Commit() or Rollback() the transaction. So, what is the benefit of this? Well, aside from the obvious readability (and consequently supportability) improvements, you also get a speed improvement! In fact, for the same 100 rows that I inserted previously using the first or second approach, the total time to complete the transaction here is between 156,250 and 312,500 ticks. Compared with the 937,500 ticks in Option 1 and the 625,000 ticks in Option 2, that represents an incredible speed improvement, and that's only for 100 rows, which, I'd imagine, is the high end in UI-defined cases.
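
To put those tick counts into more familiar units: a .NET tick is 100 nanoseconds, so there are 10,000 ticks per millisecond. The sketch below converts the figures quoted above and computes the ratios between them; the module and function names are hypothetical.

```vb
Imports System

Module TickMathSketch

    ' Converts 100-nanosecond ticks to milliseconds.
    Public Function TicksToMilliseconds(ByVal ticks As Long) As Double
        Return CDbl(ticks) / TimeSpan.TicksPerMillisecond
    End Function

    ' How many times faster the improved timing is than the baseline.
    Public Function Speedup(ByVal baselineTicks As Long, _
                            ByVal improvedTicks As Long) As Double
        Return CDbl(baselineTicks) / CDbl(improvedTicks)
    End Function

    Sub Main()
        Console.WriteLine(TicksToMilliseconds(937500))   ' Option 1
        Console.WriteLine(TicksToMilliseconds(625000))   ' Option 2
        Console.WriteLine(TicksToMilliseconds(156250))   ' Option 3, best case
        Console.WriteLine(TicksToMilliseconds(312500))   ' Option 3, worst case
        Console.WriteLine(Speedup(937500, 312500))       ' Option 1 vs. Option 3, worst case
        Console.WriteLine(Speedup(625000, 156250))       ' Option 2 vs. Option 3, best case
    End Sub

End Module
```

Worked out by hand, that is 93.75 ms for Option 1, 62.5 ms for Option 2, and 15.625 ms to 31.25 ms for the transaction approach, or roughly 3 to 6 times faster than Option 1 and 2 to 4 times faster than Option 2. All four figures are whole multiples of 156,250 ticks, which may mean the clock used for the measurements only advanced in steps of that size, so the comparison is probably best read as approximate.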

If anyone has experienced issues with using .NET transactions or performance foibles, I'd love to hear about them. Please feel free to either comment here or e-mail me at greg at samurai programmer dot com.